※1、Software environment

Application versions

Server:

Public IP:

Private IP: 192.168.167.33

Zookeeper:

Package: apache-zookeeper-3.6.3-bin.tar.gz

Install path: /usr/local/zookeeper

Hadoop:

Package: hadoop-3.3.1.tar.gz

Install path: /usr/local/hadoop

Hbase:

Package: hbase-2.4.9-bin.tar.gz

Install path: /usr/local/hbase

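※2、Installing Hadoop (pseudo-distributed mode, single node)

Preparation

Permanently set the hostname to master-node (the hostnamectl command follows the hosts entries below).

Edit the hosts file /etc/hosts so that it contains: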

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.167.33 master-node

hostnamectl set-hostname master-node --static
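The hosts entry above is easy to add twice when the steps are repeated. A minimal sketch of an idempotent helper follows; the function name add_host_entry is hypothetical, and the demo works on a scratch file rather than the real /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP hostname" to a hosts-style file only if the hostname is not mapped yet.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Match the name as a whole field: preceded by whitespace, followed by whitespace or end of line.
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file; on the real host, pass /etc/hosts instead.
hosts_file="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$hosts_file"
add_host_entry "$hosts_file" 192.168.167.33 master-node
add_host_entry "$hosts_file" 192.168.167.33 master-node   # second call changes nothing
cat "$hosts_file"
```

Running it a second time leaves the file unchanged, so the step can safely be part of a re-runnable setup script.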

2-1、JDK installation

# Upload the JDK package: 143/data/soft/jdk-8u251-linux-x64.rpm

rpm -ivh jdk-8u251-linux-x64.rpm

# Configure the environment

vim /etc/profile  # add the following lines

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

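# Reload the variables

source /etc/profile

# Disable SELinux (setenforce 0 switches to permissive mode immediately; SELINUX=disabled takes effect after a reboot)

setenforce 0 >/dev/null 2>&1

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Check the SELinux status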

sestatus

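2-2、Installation

Installing Hadoop

cd /usr/local/ && wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.3.1/hadoop-3.3.1.tar.gz

# Extract the archive

tar -xzvf hadoop-3.3.1.tar.gz

# Rename the directory

mv hadoop-3.3.1 hadoop

# Configure environment variables: append the following lines to the end of the file, then save and exit

vim /etc/profile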

export HADOOP_HOME=/usr/local/hadoop/

export LD_LIBRARY_PATH=$HADOOP_HOME/lib/native

export HADOOP_COMMON_LIB_NATIVE_DIR=/usr/local/hadoop/lib/native

export HADOOP_OPTS="-Djava.library.path=/usr/local/hadoop/lib"

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

# Reload environment variables

source /etc/profile

hadoop version  # verify the configuration

################ Output like the following indicates success

Hadoop 3.3.1

Source code repository https://github.com/apache/hadoop.git -r a3b9c37a397ad4188041dd80621bdeefc46885f2

Compiled by ubuntu on 2021-06-15T05:13Z

Compiled with protoc 3.7.1

From source with checksum 88a4ddb2299aca054416d6b7f81ca55

This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.3.1.jar

################

Edit the five Hadoop configuration files

#1、vim /usr/local/hadoop/etc/hadoop/hadoop-env.sh  # add the following variable

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64

#2、vim /usr/local/hadoop/etc/hadoop/core-site.xml  # core Hadoop/HDFS configuration file

<configuration>

<property>

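<!-- fs.default.name is the deprecated name of this key; current Hadoop releases call it fs.defaultFS -->

<name>fs.default.name</name>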

<value>hdfs://0.0.0.0:9000/hbase</value>

<description>The default file system URI</description>

</property>

<!--HADOOP TMP-->

<property>

<!-- Directory where Hadoop persists its data -->

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/data</value>

</property>

</configuration>

#3、vim /usr/local/hadoop/etc/hadoop/mapred-site.xml

<configuration>

<!--on yarn-->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

#4、vim /usr/local/hadoop/etc/hadoop/yarn-site.xml  ### remember to replace the IP below with your own host's address (192.168.167.33 in this setup)

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- YARN (ResourceManager)-->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>192.168.179.131</value>

</property>

<!-- reducer-->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

#5、vim /usr/local/hadoop/etc/hadoop/workers  # Hadoop 3.x reads workers (the file was called slaves in Hadoop 2.x); add the line below

localhost

# Set up passwordless SSH (mutual trust)

cd /root/

ssh-keygen -t rsa

ssh-copy-id -i .ssh/id_rsa root@0.0.0.0

ssh-copy-id -i .ssh/id_rsa root@localhost

ssh-copy-id -i .ssh/id_rsa root@master-node

######

ssh-copy-id -i .ssh/id_rsa root@0.0.0.0

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: ".ssh/id_rsa.pub"

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:4J4i1IaGL4b7Bb42kBXzV7FFMmHYjR090z+6juNzkZ4.

ECDSA key fingerprint is MD5:73:f1:95:60:ae:a4:39:82:30:86:d3:fd:37:05:a8:50.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@0.0.0.0's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@0.0.0.0'"

and check to make sure that only the key(s) you wanted were added.

######

ssh root@0.0.0.0

Last login: Wed Dec 22 13:58:06 2021 from 192.168.167.1

###

# Start the Hadoop node

hdfs namenode -format  # formatting succeeded if no errors appear and the output ends like this

...

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master-node/192.168.167.1

************************************************************/

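After formatting, the NameNode metadata is generated under dfs.namenode.name.dir (by default ${hadoop.tmp.dir}/dfs/name, not the DataNode directory dfs.data.dir).

# With the settings above this is /home/hadoop/data/dfs/name/current

Run start-all.sh to start the services.

Errors

1、

############### Roughly: the daemons are being started as root, but no user is defined for them in the configuration, so the *_USER variables must be set explicitly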

Starting namenodes on [0.0.0.0]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [master-node]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

2021-12-22 16:39:30,980 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting resourcemanager

ERROR: Attempting to operate on yarn resourcemanager as root

ERROR: but there is no YARN_RESOURCEMANAGER_USER defined. Aborting operation.

Starting nodemanagers

ERROR: Attempting to operate on yarn nodemanager as root

ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

##############

vim /usr/local/hadoop/sbin/start-dfs.sh  ### add the following

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vim /usr/local/hadoop/sbin/stop-dfs.sh  ### add the following

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vim /usr/local/hadoop/sbin/start-yarn.sh  ### add the following

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

vim /usr/local/hadoop/sbin/stop-yarn.sh  ### add the following

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
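Editing four scripts under sbin works, but the same variables can, as far as I know, also be exported once from hadoop-env.sh, which the Hadoop 3.x launcher scripts source. The sketch below assumes that behavior; the helper name set_daemon_users is hypothetical, and the demo writes to a scratch file instead of the real hadoop-env.sh.

```shell
#!/usr/bin/env bash
# Append each *_USER export to an env file once, skipping names that are already present.
set_daemon_users() {
  local env_file="$1" user="$2" var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    if ! grep -q "^export ${var}=" "$env_file" 2>/dev/null; then
      printf 'export %s=%s\n' "$var" "$user" >> "$env_file"
    fi
  done
}

# Demo on a scratch file; on the real host the target would be
# /usr/local/hadoop/etc/hadoop/hadoop-env.sh
env_file="$(mktemp)"
set_daemon_users "$env_file" root
set_daemon_users "$env_file" root   # idempotent: nothing is added the second time
cat "$env_file"
```

Keeping the settings in one file means they are not lost if the sbin scripts are replaced, although running the daemons as root remains a convenience for a test machine only.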

Run it again

cd /usr/local/hadoop/sbin && ./start-all.sh

### Result

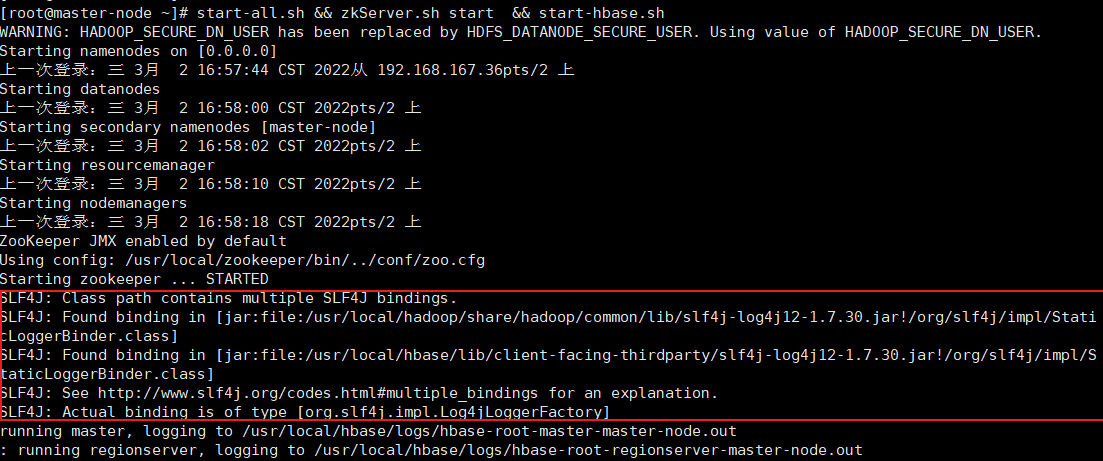

WARNING: HADOOP_SECURE_DN_USER has been replaced by HDFS_DATANODE_SECURE_USER. Using value of HADOOP_SECURE_DN_USER.

Starting namenodes on [0.0.0.0]

Last login: Wed Dec 22 16:57:48 CST 2021 from 192.168.167.1 on pts/2

Starting datanodes

Last login: Wed Dec 22 17:06:03 CST 2021 on pts/2

Starting secondary namenodes [master-node]

Last login: Wed Dec 22 17:06:06 CST 2021 on pts/2

2021-12-22 17:06:20,555 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting resourcemanager

Last login: Wed Dec 22 17:06:12 CST 2021 on pts/2

Starting nodemanagers

Last login: Wed Dec 22 17:06:22 CST 2021 on pts/2

######

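2、Whether the Hadoop cluster is being started or stopped, the following warning is printed:

WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Solution (this only silences the warning; the built-in Java classes are still used)

vim /usr/local/hadoop/etc/hadoop/log4j.properties  # append the following line to the end of the file, then save and exit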

log4j.logger.org.apache.hadoop.util.NativeCodeLoader=Error

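3、Port issue

HDFS web UI (NameNode): http://192.168.167.33:50070

MR (YARN ResourceManager) web UI: http://192.168.167.33:8088

# Port 8088 is reachable but 50070 is not, because:

In Hadoop 2.x the NameNode web UI listens on port 50070 by default

In Hadoop 3.x the NameNode web UI listens on port 9870 by default

The port can be changed through the dfs.namenode.http-address parameter in hdfs-site.xml:

vim /usr/local/hadoop/etc/hadoop/hdfs-site.xml  ## add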

<configuration>

<property>

<name>dfs.namenode.http-address</name>

<value>0.0.0.0:50070</value>

</property>

</configuration>

stop-all.sh  # stop

start-all.sh  # start
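After a restart the web UIs can take a few seconds to come up, so a failed page load right away does not necessarily mean the port setting is wrong. The following is a rough sketch of a port probe; it assumes bash (it relies on bash's /dev/tcp redirection), and the function name wait_for_port is hypothetical.

```shell
#!/usr/bin/env bash
# Retry a TCP connect once per second until the port accepts connections or the tries run out.
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}"
  local i=0
  while [ "$i" -lt "$tries" ]; do
    # The subshell opens and immediately discards the connection; errors are silenced.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Probe the NameNode web UI port configured above (a single try keeps the demo short).
if wait_for_port 127.0.0.1 50070 1; then
  echo "NameNode web UI port is open"
else
  echo "NameNode web UI port is not reachable yet"
fi
```

The same probe can be pointed at 8088 for the ResourceManager UI, or at 9870 when the Hadoop 3.x default is kept.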

# Run jps to verify that the cluster started; all five Hadoop daemons below should be listed

11824 NameNode

15536 Jps

12481 ResourceManager

12610 NodeManager

12183 SecondaryNameNode

11963 DataNode
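Checking the jps list by eye gets tedious once ZooKeeper and HBase are added. As a small sketch, the helper below (the name missing_daemons is hypothetical) takes jps-style output as text and reports which expected process names are absent; the demo feeds it a shortened sample instead of calling jps.

```shell
#!/usr/bin/env bash
# Print the expected process names that do not appear in the given jps output.
missing_daemons() {
  local jps_out="$1"; shift
  local name missing=""
  for name in "$@"; do
    # Anchor on "<pid> <name>" so that NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_out" | grep -qE "^[0-9]+ ${name}\$"; then
      missing="${missing}${name} "
    fi
  done
  printf '%s' "${missing% }"
}

# Sample output with DataNode deliberately left out; on a real host use: sample="$(jps)"
sample='11824 NameNode
15536 Jps
12481 ResourceManager
12610 NodeManager
12183 SecondaryNameNode'

missing_daemons "$sample" NameNode DataNode SecondaryNameNode ResourceManager NodeManager
echo
```

An empty result means every expected daemon is running.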

※3、Installing ZooKeeper (standalone mode)

Installing ZooKeeper

cd /usr/local/ && wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

# Extract the archive

tar xzvf apache-zookeeper-3.6.3-bin.tar.gz

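# Rename the directory

mv apache-zookeeper-3.6.3-bin zookeeper

# Add the environment variable

vim /etc/profile

export ZOO_HOME=/usr/local/zookeeper

# Reload environment variables

source /etc/profile

# Configure ZooKeeper: create the configuration file from the template, create the dataDir directory, and adjust the parameters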

cd /usr/local/zookeeper/conf && ls

configuration.xsl log4j.properties zoo_sample.cfg

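# Create the configuration file

cp zoo_sample.cfg zoo.cfg

# Create the dataDir directory

mkdir -p /usr/local/zookeeper/data && mkdir -p /usr/local/zookeeper/data/log

# Add or adjust the following parameters (dataDir already exists in the file, so just change its value; keep comments on their own lines in zoo.cfg)

vim zoo.cfg

dataDir=/usr/local/zookeeper/data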

dataLogDir=/usr/local/zookeeper/data/log

server.1=0.0.0.0:2888:3888

# Create the myid file in the dataDir directory

echo 1 > /usr/local/zookeeper/data/myid  # standalone mode has only one machine, so it is server 1
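The configuration steps above can be collected into one function. This is a sketch under assumptions: the name write_zk_config is hypothetical, the tickTime, initLimit, syncLimit and clientPort values are the ones shipped in zoo_sample.cfg, and the server.1 line from the text is left out on the assumption that a single standalone node does not need it. The demo writes into a scratch directory.

```shell
#!/usr/bin/env bash
# Write a minimal standalone zoo.cfg plus the myid file under a base directory.
write_zk_config() {
  local base="$1"
  mkdir -p "${base}/conf" "${base}/data/log"
  cat > "${base}/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=${base}/data
dataLogDir=${base}/data/log
EOF
  echo 1 > "${base}/data/myid"
}

# Demo in a scratch directory; on the real host the base would be /usr/local/zookeeper
zk_base="$(mktemp -d)"
write_zk_config "$zk_base"
cat "${zk_base}/conf/zoo.cfg"
```

Generating the file this way also avoids trailing comments after a value, which the properties format would treat as part of the value.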

# Start the ZooKeeper node

zkServer.sh start  ## if the command is not found, use: /usr/local/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the node status or role

jps  # QuorumPeerMain in the list means ZooKeeper is running normally

89138 NodeManager

90374 Jps

88701 SecondaryNameNode

90333 QuorumPeerMain

89004 ResourceManager

88335 NameNode

88478 DataNode

# In standalone mode there is only one role: standalone

/usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: standalone

# Stop the ZooKeeper node

/usr/local/zookeeper/bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

jps

89138 NodeManager

90983 Jps

88701 SecondaryNameNode

89004 ResourceManager

88335 NameNode

88478 DataNode

/usr/local/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Error contacting service. It is probably not running.

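※4、Installing HBase (single node)

Installing HBase

cd /usr/local/ && wget https://mirrors.tuna.tsinghua.edu.cn/apache/hbase/2.4.9/hbase-2.4.9-bin.tar.gz

# Extract the archive

tar xzvf hbase-2.4.9-bin.tar.gz

# Rename the directory

mv hbase-2.4.9 hbase

# Add environment variables

vim /etc/profile

export HBASE_HOME=/usr/local/hbase  # add this variable

# Append :$HBASE_HOME/bin to the PATH variable

########################## Final environment variables (you can delete the variables added earlier and paste this whole block instead)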

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

export HBASE_HOME=/usr/local/hbase

export PATH=$PATH:$HBASE_HOME/bin

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

ZOOKEEPER_HOME=/usr/local/zookeeper/

export PATH=$ZOOKEEPER_HOME/bin:$PATH

PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin

export JAVA_HOME HBASE_HOME HADOOP_HOME ZOOKEEPER_HOME PATH

##########################

# Reload environment variables

source /etc/profile
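Since /etc/profile has now been edited several times, one option is to keep the whole block in a dedicated file instead. The sketch below assumes a RHEL/CentOS-style system where /etc/profile sources every *.sh file in /etc/profile.d; the file name hadoop-stack.sh and the PROFILE_DIR variable are hypothetical, and the demo writes to a scratch directory unless PROFILE_DIR is set.

```shell
#!/usr/bin/env bash
# Keep the stack's variables in one file rather than scattered through /etc/profile.
profile_dir="${PROFILE_DIR:-$(mktemp -d)}"
env_file="${profile_dir}/hadoop-stack.sh"

# The quoted heredoc delimiter stores $JAVA_HOME etc. literally; they expand when the file is sourced.
cat > "$env_file" <<'EOF'
export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64
export HADOOP_HOME=/usr/local/hadoop
export ZOOKEEPER_HOME=/usr/local/zookeeper
export HBASE_HOME=/usr/local/hbase
export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$PATH
EOF

# Load the file into the current shell and show what each variable resolved to.
. "$env_file"
printf 'JAVA_HOME=%s\nHADOOP_HOME=%s\nZOOKEEPER_HOME=%s\nHBASE_HOME=%s\n' \
  "$JAVA_HOME" "$HADOOP_HOME" "$ZOOKEEPER_HOME" "$HBASE_HOME"
```

With ZOOKEEPER_HOME/bin on the PATH, zkServer.sh can be called without its full path.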

# Edit the four configuration files in the HBase configuration directory /usr/local/hbase/conf

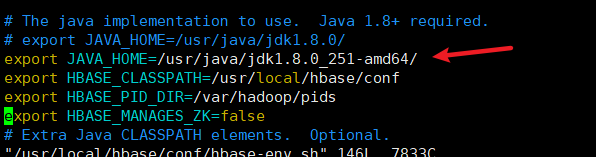

#1、cd /usr/local/hbase/conf/ && vim hbase-env.sh

# Uncomment the following four variables and set them as shown

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64/

export HBASE_CLASSPATH=/usr/local/hbase/conf

export HBASE_PID_DIR=/var/hadoop/pids

export HBASE_MANAGES_ZK=false  # do not use the ZooKeeper bundled with HBase

#2、Copy zoo.cfg

cd /usr/local/hbase/conf/

We are using the ZooKeeper installed earlier rather than the one bundled with HBase, so copy its zoo.cfg into the HBase conf directory:

cp /usr/local/zookeeper/conf/zoo.cfg /usr/local/hbase/conf/

#3、cd /usr/local/hbase/conf/ && vim hbase-site.xml  (you can paste the whole file; check the ports and IP addresses in it)

<configuration>

<!--

The following properties are set for running HBase as a single process on a

developer workstation. With this configuration, HBase is running in

"stand-alone" mode and without a distributed file system. In this mode, and

without further configuration, HBase and ZooKeeper data are stored on the

local filesystem, in a path under the value configured for `hbase.tmp.dir`.

This value is overridden from its default value of `/tmp` because many

systems clean `/tmp` on a regular basis. Instead, it points to a path within

this HBase installation directory.

Running against the `LocalFileSystem`, as opposed to a distributed

filesystem, runs the risk of data integrity issues and data loss. Normally

HBase will refuse to run in such an environment. Setting

`hbase.unsafe.stream.capability.enforce` to `false` overrides this behavior,

permitting operation. This configuration is for the developer workstation

only and __should not be used in production!__

See also https://hbase.apache.org/book.html#standalone_dist

-->

<property>

<name>hbase.rootdir</name>

<value>hdfs://0.0.0.0:9000/hbase</value>

<!-- Note: to use a different port, change it first in /usr/local/hadoop/etc/hadoop/core-site.xml -->

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master-node</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

<description>ZooKeeper client port</description>

</property>

<property>

<name>hbase.regionserver.ipc.address</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/var/hbase</value>

</property>

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/usr/local/zookeeper/data</value>

</property>

<property>

<name>hbase.master.info.port</name>

<value>60000</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

</configuration>

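#4、cat regionservers

localhost

# Start HBase (run on the master node). ZooKeeper and Hadoop must already be running, so start the services in this order:

# Start ZooKeeper

zkServer.sh start

# Check the ZooKeeper status and role

zkServer.sh status

# Start Hadoop

start-all.sh

# Start HBase

start-hbase.sh

jps

# HBase started successfully: HMaster and HRegionServer are listed

# Hadoop started successfully: NameNode, SecondaryNameNode, DataNode, ResourceManager and NodeManager are listed

# ZooKeeper started successfully: QuorumPeerMain is listed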

89138 NodeManager

100306 Jps

99030 HRegionServer

91113 QuorumPeerMain

98889 HMaster

88701 SecondaryNameNode

89004 ResourceManager

88335 NameNode

88478 DataNode

# Enter the HBase shell

hbase shell

hbase(main):001:0> status

1 active master, 0 backup masters, 1 servers, 0 dead, 12.0000 average load

Took 0.5168 seconds

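# Stop HBase (run on the master node)

stop-hbase.sh

# Stop ZooKeeper

zkServer.sh stop

# Stop Hadoop

stop-all.sh

# Check

jps

# Problems encountered

jps shows that HRegionServer is missing, i.e. it failed to start. In this setup the fix was to put localhost in the regionservers file; entering the hostname or the IP made the start fail.

Querying the HBase status then fails: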

hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2021-12-23 14:37:01,403 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.4.9, rf844d09157d9dce6c54fcd53975b7a45865ee9ac, Wed Oct 27 08:48:57 PDT 2021

Took 0.0044 seconds

hbase:001:0> status

ERROR: org.apache.hadoop.hbase.ipc.ServerNotRunningYetException: Server is not running yet

at org.apache.hadoop.hbase.master.HMaster.checkServiceStarted(HMaster.java:2817)

at org.apache.hadoop.hbase.master.MasterRpcServices.isMasterRunning(MasterRpcServices.java:1205)

at org.apache.hadoop.hbase.shaded.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java)

at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:392)

at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:133)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:354)

at org.apache.hadoop.hbase.ipc.RpcExecutor$Handler.run(RpcExecutor.java:334)

For usage try 'help "status"'

Took 11.1160 seconds

hbase:002:0>

# The log shows:

at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper$5.operationComplete(FanOutOneBlockAsyncDFSOutputHelper.java:477)

at org.apache.hadoop.hbase.io.asyncfs.FanOutOneBlockAsyncDFSOutputHelper$5.operationComplete(FanOutOneBlockAsyncDFSOutputHelper.java:472)

# Fix: add the following property to /usr/local/hbase/conf/hbase-site.xml

<property>

<name>hbase.wal.provider</name>

<value>filesystem</value>

</property>

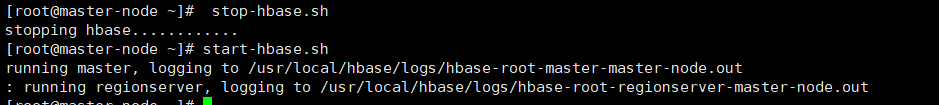

# Restart HBase

stop-hbase.sh

start-hbase.sh

hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2021-12-23 15:30:10,560 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

For Reference, please visit: http://hbase.apache.org/2.0/book.html#shell

Version 2.4.9, rf844d09157d9dce6c54fcd53975b7a45865ee9ac, Wed Oct 27 08:48:57 PDT 2021

Took 0.0046 seconds

hbase:001:0> status

1 active master, 0 backup masters, 1 servers, 0 dead, 2.0000 average load

Took 1.7662 seconds

# Success

Error 1:

The SLF4J binding jar is on the classpath twice:

Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

Found binding in [jar:file:/usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]

Delete /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar. Do not delete /usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.30.jar,

otherwise you will get:

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.
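Because deleting the wrong copy produces the NOP-logger messages above, a more cautious variant is to rename the duplicate so it can be restored. This is only a sketch: the helper name disable_jar is hypothetical, it assumes a file that no longer ends in .jar is not picked up on the classpath, and the demo uses an empty stand-in file in a scratch directory.

```shell
#!/usr/bin/env bash
# Rename a jar so that it can be put back later instead of being deleted outright.
disable_jar() {
  local jar="$1"
  if [ -f "$jar" ]; then
    mv "$jar" "${jar}.disabled"
  fi
}

# Demo with a stand-in file; on the real host the argument would be
# /usr/local/hbase/lib/client-facing-thirdparty/slf4j-log4j12-1.7.30.jar
lib_dir="$(mktemp -d)"
touch "${lib_dir}/slf4j-log4j12-1.7.30.jar"
disable_jar "${lib_dir}/slf4j-log4j12-1.7.30.jar"
ls "$lib_dir"
```

Restoring the jar is then a single mv back to the original name.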

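Error 2: JAVA_HOME is not set and Java could not be found

Solution

The JAVA_HOME variable in hbase-env.sh under the HBase conf directory is either not set or still commented out.

Set it as shown in the hbase-env.sh step and the error goes away.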

Command reference

Restart Hadoop

stop-all.sh

start-all.sh

Restart ZooKeeper

zkServer.sh stop

zkServer.sh start

Restart HBase

stop-hbase.sh

start-hbase.sh

Start everything (HBase last), then stop everything (HBase first):

start-all.sh && zkServer.sh start && start-hbase.sh

stop-hbase.sh && zkServer.sh stop && stop-all.sh
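The ordering matters because HBase depends on both ZooKeeper and HDFS, so it should come up last and go down first. A minimal sketch of a wrapper that encodes this order follows; the function names and the DRY_RUN variable are hypothetical, and DRY_RUN defaults to 1 so the example only prints the commands.

```shell
#!/usr/bin/env bash
# List the stack's start or stop commands in dependency order.
stack_commands() {
  case "$1" in
    start) printf '%s\n' 'zkServer.sh start' 'start-all.sh' 'start-hbase.sh' ;;
    stop)  printf '%s\n' 'stop-hbase.sh' 'zkServer.sh stop' 'stop-all.sh' ;;
    *)     echo "usage: stack_commands start|stop" >&2; return 2 ;;
  esac
}

# Print the commands (DRY_RUN=1, the default) or execute them one by one (DRY_RUN=0).
run_stack() {
  local action="$1" cmds cmd
  cmds="$(stack_commands "$action")" || return 2
  printf '%s\n' "$cmds" | while IFS= read -r cmd; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "would run: $cmd"
    else
      # Unquoted on purpose so that "zkServer.sh start" splits into command and argument.
      $cmd || exit 1
    fi
  done
}

run_stack stop
```

Calling it with DRY_RUN=0 executes the commands and stops at the first one that fails.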

# hbase shell

hbase(main):001:0> status

Solutions to a number of HBase errors:

https://www.w3cschool.cn/hbase_doc/hbase_doc-6yxp2ze8.html