| IP | Hostname | Components | Configuration |
|---|---|---|---|
| 192.168.167.120 | ELK01 | Elasticsearch, Logstash | At least 2 GB of memory |
| 192.168.167.121 | ELK02 | Elasticsearch, Kibana | At least 2 GB of memory |
| 192.168.167.122 | ELK03 | Elasticsearch, Logstash | At least 2 GB of memory |
| 192.168.167.120 | Filebeat | Filebeat | At least 2 GB of memory |

Installation packages: search the attachment library for ELK安装包.zip

System initialization

swapoff -a

sed -i.bak '/swap/s/^/#/' /etc/fstab

sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

setenforce 0 # temporary

systemctl disable firewalld

systemctl stop firewalld

yum install -y ntpdate vim net-tools lrzsz

/usr/sbin/ntpdate -u time.windows.com >/dev/null 2>&1 && clock -w >/dev/null 2>&1

cat /etc/sysctl.conf

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.ip_local_port_range = 1024 65000

net.ipv4.tcp_tw_reuse = 1

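# tcp_tw_recycle was removed in Linux 4.12; on newer kernels drop the next line, otherwise sysctl -p reports an error

net.ipv4.tcp_tw_recycle = 1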

net.ipv4.tcp_fin_timeout = 10

net.ipv4.tcp_syncookies = 1

net.core.netdev_max_backlog = 262144

net.ipv4.tcp_max_orphans = 262144

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_keepalive_time = 30

net.ipv4.tcp_mem= 786432 2097152 3145728

net.ipv4.tcp_rmem= 4096 4096 16777216

net.ipv4.tcp_wmem= 4096 4096 16777216

cat /etc/security/limits.conf

* soft nofile 65536

* hard nofile 131072

* soft nproc 2048

* hard nproc 4096

* soft memlock unlimited

* hard memlock unlimited

sysctl -p
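After `sysctl -p`, it can help to confirm that the running kernel holds the values written to the file. The following is a minimal sketch, not part of the original procedure; `sysctl_conf_value` is a hypothetical helper name that reads one key from a sysctl-style file so the result can be compared with `sysctl -n`.

```shell
# Hypothetical helper: print the value configured for KEY in a sysctl-style FILE.
# Accepts both "key = value" and "key= value"; comment lines are ignored.
sysctl_conf_value() {
    awk -F= -v key="$2" '
        /^[ \t]*#/ { next }                     # skip comment lines
        {
            k = $1
            gsub(/[ \t]/, "", k)                # strip whitespace around the key
            if (k == key) {
                v = $0
                sub(/^[^=]*=[ \t]*/, "", v)     # drop everything up to the value
                sub(/[ \t]+$/, "", v)           # drop trailing whitespace
                print v
            }
        }' "$1"
}

# Example comparison for a single-valued key (run on a configured host):
#   [ "$(sysctl_conf_value /etc/sysctl.conf net.ipv4.tcp_fin_timeout)" = "$(sysctl -n net.ipv4.tcp_fin_timeout)" ] && echo match
```

Multi-valued keys such as `net.ipv4.tcp_mem` are printed by `sysctl -n` with tab separators, so a direct string comparison is only reliable for single-valued keys.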

Install Elasticsearch (on all 3 machines)

rpm -ivh jdk-8u251-linux-x64.rpm

rpm -ivh elasticsearch-6.8.9.rpm

mkdir -p /data/elk/elasticsearch && chown -R elasticsearch. /data/elk/elasticsearch

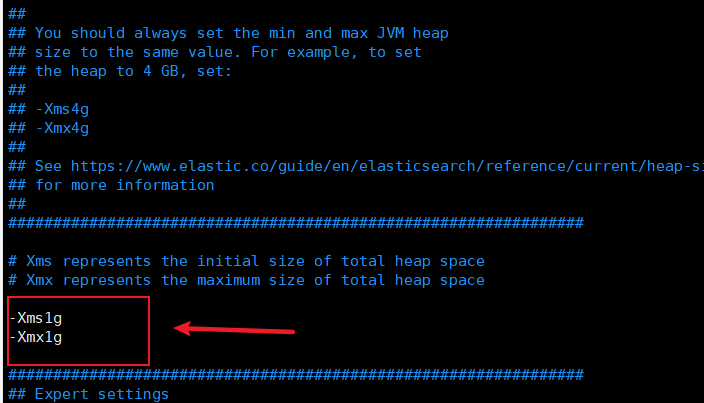

vim /etc/elasticsearch/jvm.options (allocate heap according to the machine's resources and the data volume)

-Xms1g

-Xmx1g

# See Figure 1 below

cat /etc/elasticsearch/elasticsearch.yml

Elasticsearch configuration file:

cluster.name: DDM-ELK

node.name: node-1 # change per node

path.data: /data/elk/elasticsearch

path.logs: /var/log/elasticsearch

node.master: false # set to true on 192.168.167.120 and 192.168.167.121, false on the other ES nodes

node.data: true

bootstrap.memory_lock: false

bootstrap.system_call_filter: false

indices.fielddata.cache.size: 40%

network.host: 192.168.1.196 # change to this node's IP

# discovery.zen.minimum_master_nodes: a value of 2 means two master-eligible nodes, so node.master must be true on two nodes and false on one (three-node cluster as the example)

# discovery.zen.minimum_master_nodes: a value of 1 means one master-eligible node, so node.master must be true on one node and false on two (three-node cluster as the example)

discovery.zen.minimum_master_nodes: 2 # adjust to the number of master-eligible nodes: (master-eligible nodes / 2) + 1, to prevent split brain

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.167.120","192.168.167.121","192.168.167.122"]

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.ssl.key: DDM-ELK.key

xpack.ssl.certificate: DDM-ELK.crt

xpack.ssl.certificate_authorities: ca.crt
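The quorum rule in the comments above (master-eligible nodes / 2 + 1, with integer division) can be sketched as a one-line function. `min_master_nodes` is a hypothetical helper name used only for illustration.

```shell
# Value for discovery.zen.minimum_master_nodes:
# (number of master-eligible nodes) / 2 + 1, using integer division.
min_master_nodes() {
    echo $(( $1 / 2 + 1 ))
}

min_master_nodes 2    # two master-eligible nodes, as in this cluster: prints 2
min_master_nodes 3    # three master-eligible nodes: prints 2
```

With two master-eligible nodes the quorum is 2, so both must be up for a master to be elected; three master-eligible nodes give the same quorum while tolerating the loss of one.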

#master

cat /etc/elasticsearch/elasticsearch.yml

Elasticsearch configuration file:

cluster.name: DDM-ELK # cluster name

node.name: master # name of this node

path.data: /data/elk/elasticsearch # data directory

path.logs: /var/log/elasticsearch # log directory

node.master: true # this node is master-eligible; set to true on 167.120 and 167.121, false on the other ES nodes

node.data: true # this node is a data node

bootstrap.memory_lock: false # memory is not locked, so ES may be swapped out

bootstrap.system_call_filter: false

indices.fielddata.cache.size: 40% # cache used for sorting and filtering; at least 10g is recommended, 10% to 40% of the JVM heap

network.host: 192.168.167.120 # bind IP

discovery.zen.minimum_master_nodes: 2 # adjust to the number of master-eligible nodes: (master-eligible nodes / 2) + 1, to prevent split brain

http.port: 9200 # port of the ES HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.167.120","192.168.167.121","192.168.167.122"] # hosts used for node discovery

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.ssl.key: DDM-ELK.key

xpack.ssl.certificate: DDM-ELK.crt

xpack.ssl.certificate_authorities: ca.crt

#node1

cat /etc/elasticsearch/elasticsearch.yml

Elasticsearch configuration file:

cluster.name: DDM-ELK # cluster name

node.name: node-1 # name of this node

path.data: /data/elk/elasticsearch # data directory

path.logs: /var/log/elasticsearch # log directory

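node.master: true # master-eligible: minimum_master_nodes is 2, so 192.168.167.121 must be master-eligible as well as 192.168.167.120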

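node.data: true # this node is a data node

bootstrap.memory_lock: false # memory is not locked, so ES may be swapped out

bootstrap.system_call_filter: false

indices.fielddata.cache.size: 40% # cache used for sorting and filtering; at least 10g is recommended, 10% to 40% of the JVM heap

network.host: 192.168.167.121 # bind IP

discovery.zen.minimum_master_nodes: 2 # adjust to the number of master-eligible nodes: (master-eligible nodes / 2) + 1, to prevent split brain

http.port: 9200 # port of the ES HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.167.120","192.168.167.121","192.168.167.122"] # hosts used for node discovery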

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.ssl.key: DDM-ELK.key

xpack.ssl.certificate: DDM-ELK.crt

xpack.ssl.certificate_authorities: ca.crt

#node2

cat /etc/elasticsearch/elasticsearch.yml

Elasticsearch configuration file:

cluster.name: DDM-ELK # cluster name

node.name: node-2 # name of this node

path.data: /data/elk/elasticsearch # data directory

path.logs: /var/log/elasticsearch # log directory

node.master: false # this node is not master-eligible

node.data: true # this node is a data node

bootstrap.memory_lock: false # memory is not locked, so ES may be swapped out

bootstrap.system_call_filter: false

indices.fielddata.cache.size: 40% # cache used for sorting and filtering; at least 10g is recommended, 10% to 40% of the JVM heap

network.host: 192.168.167.122 # bind IP

discovery.zen.minimum_master_nodes: 2 # adjust to the number of master-eligible nodes: (master-eligible nodes / 2) + 1, to prevent split brain

http.port: 9200 # port of the ES HTTP service

discovery.zen.ping.unicast.hosts: ["192.168.167.120","192.168.167.121","192.168.167.122"] # hosts used for node discovery

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.ssl.key: DDM-ELK.key

xpack.ssl.certificate: DDM-ELK.crt

xpack.ssl.certificate_authorities: ca.crt

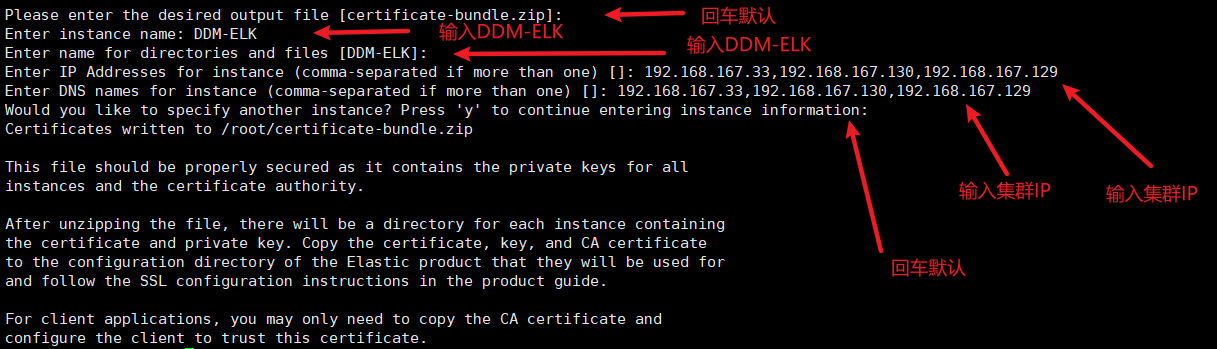

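Generate the certificates on ELK01

/usr/share/elasticsearch/bin/elasticsearch-certgen

1. Certificate output file name: press Enter to accept the default

2. Cluster name: DDM-ELK (enter the cluster name)

3. Certificate directory name: defaults to the cluster name (enter the cluster name)

4. Node IPs: 192.168.167.120,192.168.167.121,192.168.167.122 (enter the cluster IPs)

5. Node names: 192.168.167.120,192.168.167.121,192.168.167.122 (enter the cluster IPs)

6. Any other instances: none, just press Enter

Copy the certificates to ELK01, ELK02, and ELK03

# Unzip the certificate bundle in the current directory

unzip certificate-bundle.zip

cp ca/ca.crt DDM-ELK/DDM-ELK.crt DDM-ELK/DDM-ELK.key /etc/elasticsearch/

# Check that the copied file is identical to the original

cksum DDM-ELK/DDM-ELK.key && cksum /etc/elasticsearch/DDM-ELK.key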
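Comparing checksums by eye is easy to get wrong when three files go to three nodes. As a sketch only, the check can be wrapped in a small function that compares each source file byte for byte with its copy; `verify_copies` is a hypothetical helper name, not a tool shipped with Elasticsearch.

```shell
# Hypothetical helper: verify_copies DEST_DIR FILE...
# Succeeds only if every FILE is byte-identical to DEST_DIR/<basename of FILE>.
verify_copies() {
    dest=$1
    shift
    rc=0
    for f in "$@"; do
        if cmp -s "$f" "$dest/$(basename "$f")"; then
            echo "OK   $f"
        else
            echo "DIFF $f"
            rc=1
        fi
    done
    return "$rc"
}

# Usage after the cp step above:
#   verify_copies /etc/elasticsearch ca/ca.crt DDM-ELK/DDM-ELK.crt DDM-ELK/DDM-ELK.key
```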

systemctl daemon-reload

systemctl restart elasticsearch.service

systemctl status elasticsearch.service

systemctl enable elasticsearch.service

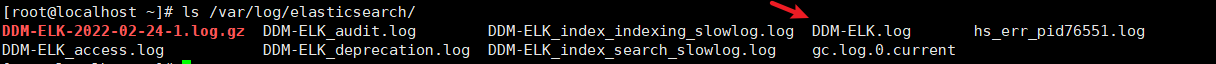

# Logs

ls /var/log/elasticsearch/

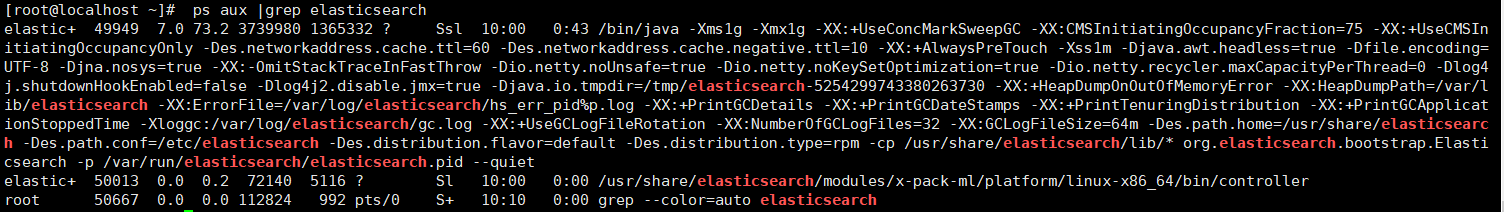

ps aux |grep elasticsearch

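# The ES service listens on two ports

netstat -lntp |grep java

Port 9300 is used for communication between cluster nodes; port 9200 is used for data transfer over HTTP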
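The two-port check can be automated instead of read off the `netstat` output. The sketch below assumes the usual `netstat -lntp` column layout (local address in column 4, state in column 6); `es_ports_listening` is a hypothetical helper name.

```shell
# Hypothetical helper: read `netstat -lntp` output on stdin and succeed only
# if both the HTTP port (9200) and the transport port (9300) are listening.
es_ports_listening() {
    awk '
        $6 == "LISTEN" {
            n = split($4, parts, ":")       # the port is the last ":"-separated field
            if (parts[n] == "9200") http = 1
            if (parts[n] == "9300") transport = 1
        }
        END { exit !(http && transport) }'
}

# Usage on an Elasticsearch node:
#   netstat -lntp | es_ports_listening && echo "9200 and 9300 are listening"
```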

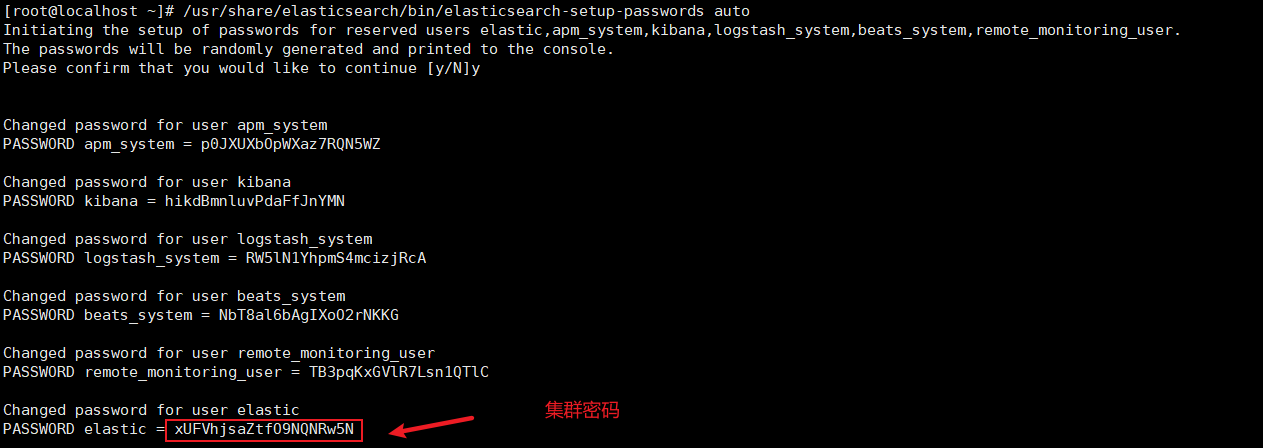

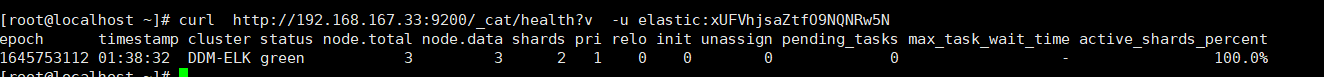

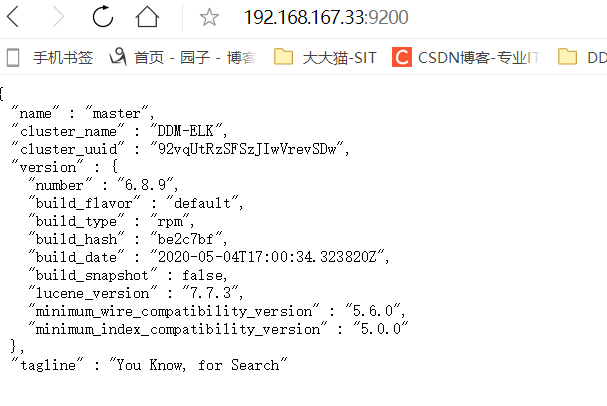

Generate the account passwords on ELK01 and test them

/usr/share/elasticsearch/bin/elasticsearch-setup-passwords auto

curl -u 'elastic:<password>' 'http://192.168.167.120:9200/_cat/health?v'

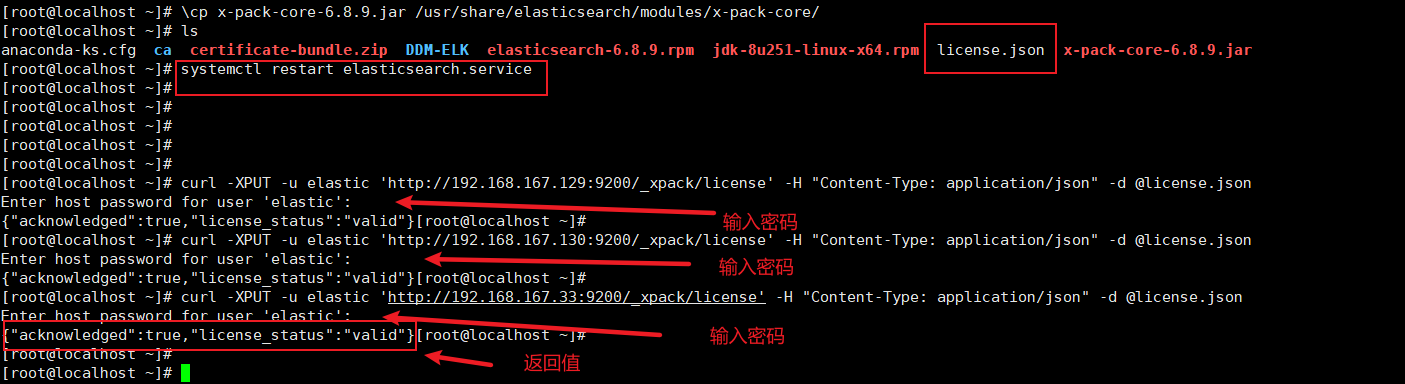
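For scripted checks, the status column alone is often enough. The sketch below maps a status string to an exit code; `health_ok` and the `ES_PASSWORD` environment variable are assumptions made for illustration, and treating yellow as acceptable is a design choice rather than a rule from this procedure.

```shell
# Hypothetical helper: map a cluster status string to an exit code.
# green and yellow are treated as usable here; red or anything else fails.
health_ok() {
    case "$1" in
        green|yellow) return 0 ;;
        *)            return 1 ;;
    esac
}

# Usage (ES_PASSWORD is an assumed variable holding the elastic password):
#   status=$(curl -s -u "elastic:$ES_PASSWORD" 'http://192.168.167.120:9200/_cat/health?h=status' | tr -d '[:space:]')
#   health_ok "$status" && echo "cluster is $status"
```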

Crack X-Pack (on every machine)

# Upload two files

# x-pack-core-6.8.9.jar and license.json

cp x-pack-core-6.8.9.jar /usr/share/elasticsearch/modules/x-pack-core/

systemctl restart elasticsearch

curl -XPUT -u elastic 'http://192.168.167.120:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

curl -XPUT -u elastic 'http://192.168.167.121:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

curl -XPUT -u elastic 'http://192.168.167.122:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

Configure Logstash (install on 192.168.167.120 and 192.168.167.122 as planned)

#192.168.167.120

rpm -ivh logstash-6.8.9.rpm

mkdir /data/elk/logstash -p && chown -R logstash. /data/elk/logstash

##############################################################################################

cat >/etc/logstash/logstash.yml <<EOF

path.data: /data/elk/logstash

node.name: logstash_node1

http.host: "192.168.167.120"

path.logs: /var/log/logstash

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: elastic

xpack.monitoring.elasticsearch.password: <password>

xpack.monitoring.elasticsearch.hosts: ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

xpack.monitoring.elasticsearch.sniffing: false

xpack.monitoring.collection.interval: 30s

xpack.management.enabled: true

xpack.management.pipeline.id: ["test_pieline"]

xpack.management.elasticsearch.username: elastic

xpack.management.elasticsearch.password: <password>

xpack.management.elasticsearch.hosts: ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

EOF

##############################################################################################

systemctl enable logstash.service

systemctl start logstash.service

netstat -lntp |grep 9600

#192.168.167.122

rpm -ivh logstash-6.8.9.rpm

mkdir /data/elk/logstash -p && chown -R logstash. /data/elk/logstash

##############################################################################################

cat >/etc/logstash/logstash.yml <<EOF

path.data: /data/elk/logstash

node.name: logstash_node2

http.host: "192.168.167.122" # change to this node's IP

path.logs: /var/log/logstash

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: elastic

xpack.monitoring.elasticsearch.password: <password> # fill in the password

xpack.monitoring.elasticsearch.hosts: ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

xpack.monitoring.elasticsearch.sniffing: false

xpack.monitoring.collection.interval: 30s

xpack.management.enabled: true

xpack.management.pipeline.id: ["test_pieline"]

xpack.management.elasticsearch.username: elastic

xpack.management.elasticsearch.password: <password>

xpack.management.elasticsearch.hosts: ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

EOF

##############################################################################################

systemctl enable logstash.service

systemctl start logstash.service

netstat -lntp |grep 9600

Install and configure Kibana (on 192.168.167.121 as planned)

rpm -ivh kibana-6.8.9-x86_64.rpm

cat /etc/kibana/kibana.yml

server.port: 5601

server.host: "192.168.167.121"

server.name: "ddm-Kibana"

elasticsearch.hosts: ["http://192.168.167.121:9200"]

i18n.locale: "zh-CN"

elasticsearch.username: "elastic"

elasticsearch.password: "<password>"

systemctl enable kibana

systemctl start kibana

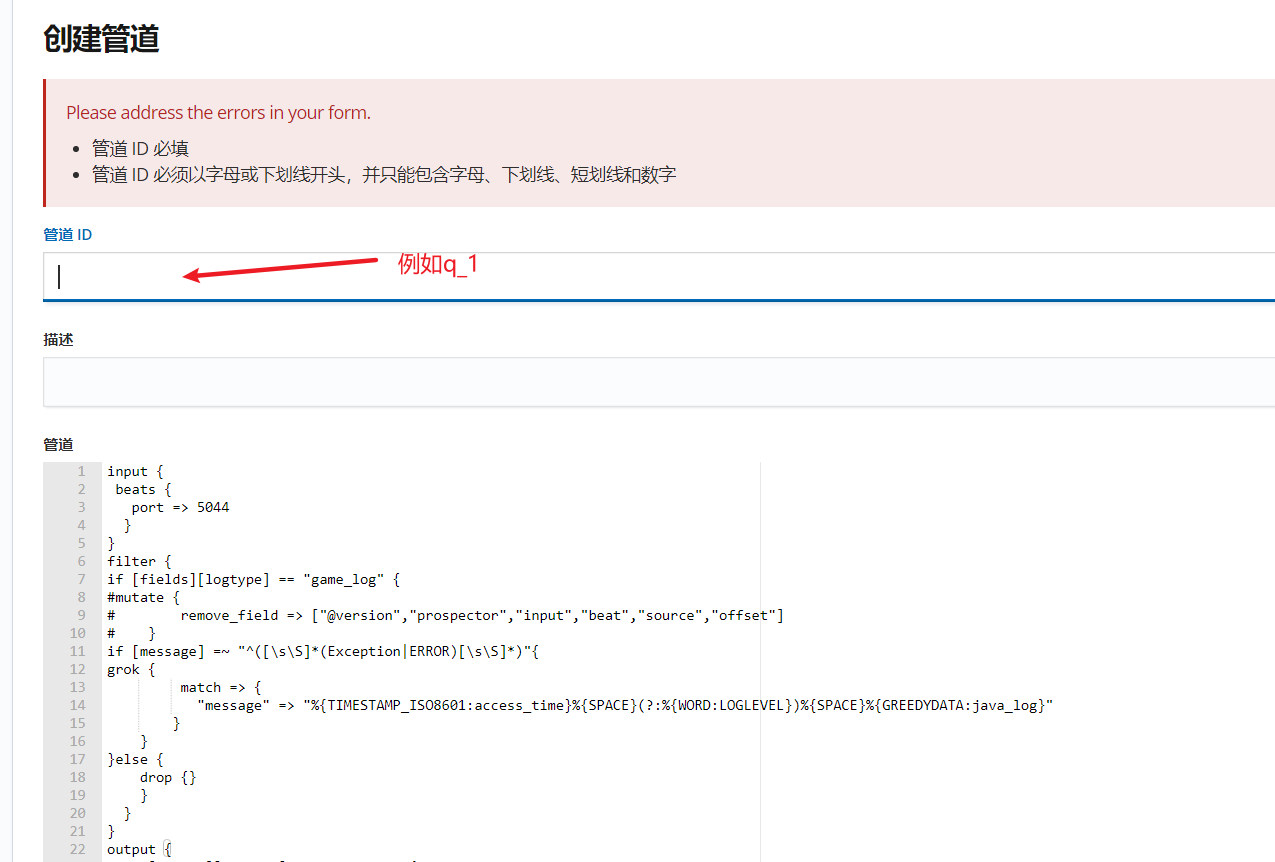

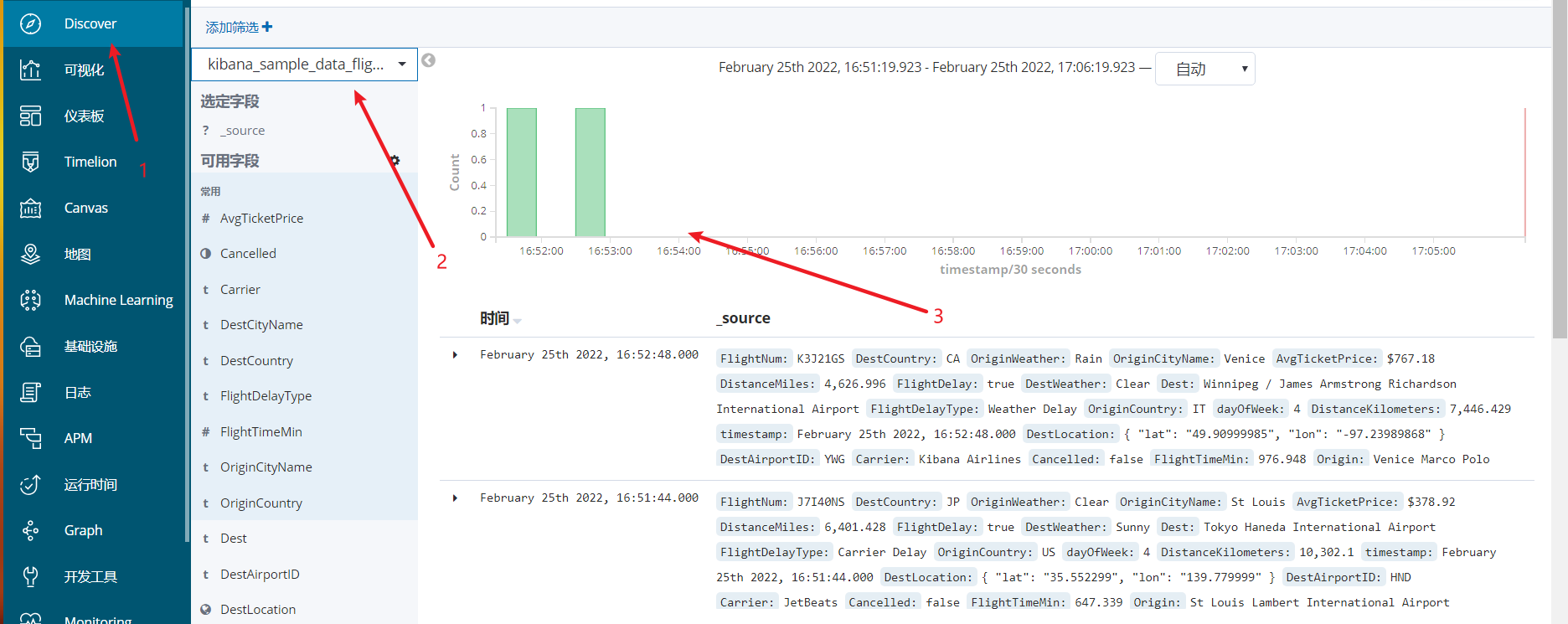

Configure the Logstash pipeline in the Kibana UI

input {

beats {

port => 5044

}

}

filter {

if [fields][logtype] == "game_log" {

#mutate {

# remove_field => ["@version","prospector","input","beat","source","offset"]

# }

if [message] =~ "^([\s\S]*(Exception|ERROR)[\s\S]*)"{

grok {

match => {

"message" => "%{TIMESTAMP_ISO8601:access_time}%{SPACE}(?:%{WORD:LOGLEVEL})%{SPACE}%{GREEDYDATA:java_log}"

}

}

}else {

drop {}

}

}

}

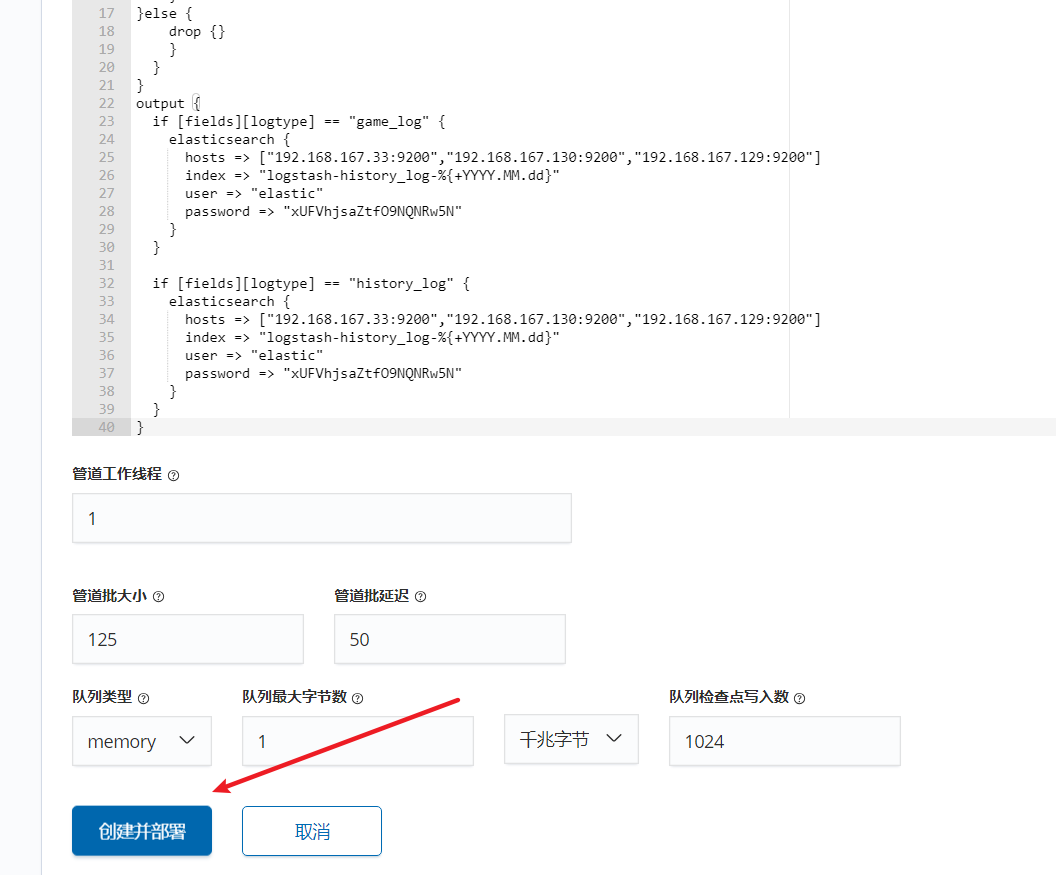

output {

if [fields][logtype] == "game_log" {

elasticsearch {

hosts => ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

index => "logstash-game_log-%{+YYYY.MM.dd}"

user => "elastic"

password => "<password>"

}

}

if [fields][logtype] == "history_log" {

elasticsearch {

hosts => ["192.168.167.120:9200","192.168.167.121:9200","192.168.167.122:9200"]

index => "logstash-history_log-%{+YYYY.MM.dd}"

user => "elastic"

password => "<password>"

}

}

}
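Each output above writes to a daily index whose name ends in the event date. When checking whether events arrived, the expected index name can be built in the shell; this is a sketch, and `daily_index` is a hypothetical helper name. Logstash formats `%{+YYYY.MM.dd}` from `@timestamp` in UTC, so the default date below is also taken in UTC.

```shell
# Hypothetical helper: daily_index TYPE [YYYY-MM-DD]
# Builds the index name for the pattern "logstash-<TYPE>-%{+YYYY.MM.dd}".
# The date defaults to today in UTC.
daily_index() {
    day=${2:-$(date -u +%Y-%m-%d)}
    printf 'logstash-%s-%s\n' "$1" "$(printf '%s' "$day" | sed 's/-/./g')"
}

daily_index history_log 2020-06-01    # prints logstash-history_log-2020.06.01

# Usage against the cluster (password placeholder as elsewhere in this document):
#   curl -u 'elastic:<password>' "http://192.168.167.120:9200/_cat/indices/$(daily_index history_log)?v"
```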

Install Filebeat

rpm -ivh filebeat-6.8.9-x86_64.rpm

cat /etc/filebeat/filebeat.yml

filebeat.prospectors:

- input_type: log

  paths:

    - /data/appsgameh5-cg/PTG0001/log/logInfo/all.log

  document_type: "game_log"

  multiline:

    pattern: '^\d{4}-\d{1,2}-\d{1,2}'

    negate: true

    match: after

    max_lines: 1000

    timeout: 30s

  fields:

    logsource: CG01_JAR1_PTG0001

    logtype: game_log

output.logstash:

  hosts: ["192.168.167.120:5044"]
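The multiline settings mean that a line starting with a date opens a new event, and every other line (for example a Java stack trace) is appended to the previous one. As a rough sketch of that grouping, the number of resulting events can be estimated by counting date-prefixed lines; `count_events` is a hypothetical helper, the sample log lines are invented for illustration, and limits such as `max_lines` and `timeout` are ignored.

```shell
# Hypothetical helper: count the events the multiline pattern would produce
# from the log text on stdin. \d in the Filebeat pattern is written [0-9] here.
count_events() {
    grep -Ec '^[0-9]{4}-[0-9]{1,2}-[0-9]{1,2}'
}

# Illustrative input: one error with a two-line stack trace, then one info line.
printf '%s\n' \
    '2020-06-01 12:00:00 ERROR something failed' \
    'java.lang.IllegalStateException: boom' \
    '    at com.example.Main.run(Main.java:10)' \
    '2020-06-01 12:00:01 INFO ok' | count_events    # prints 2
```

Running it against a real log (`count_events < all.log`) gives a quick upper bound to compare with the document count in the daily index, since the pipeline drops events without `Exception` or `ERROR`.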

systemctl enable filebeat

systemctl start filebeat

Add an Elasticsearch node (fresh installation on a new machine)

# Install the software

rpm -ivh jdk-8u251-linux-x64.rpm

rpm -ivh elasticsearch-6.8.9.rpm

vi /etc/elasticsearch/elasticsearch.yml

vim /etc/elasticsearch/jvm.options # set the heap size

mkdir -p /data/elk/elasticsearch && chown -R elasticsearch. /data/elk/elasticsearch

# Copy the certificates

cp cert.zip /etc/elasticsearch && cd /etc/elasticsearch && unzip cert.zip && cp cert/* . && rm -rf cert

# Crack X-Pack again on all nodes

cp x-pack-core-6.8.9.jar /usr/share/elasticsearch/modules/x-pack-core/

curl -XPUT -u elastic 'http://192.168.101.2:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

# Start the service

systemctl daemon-reload

systemctl restart elasticsearch

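Add an Elasticsearch node (wipe and rebuild a node from the old environment)

Stop the elasticsearch service

systemctl stop elasticsearch

Delete the data directory of the node being rebuilt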

cd /data/elk/elasticsearch && rm nodes/ -rf

mkdir -p /data/elk/elasticsearch && chown -R elasticsearch. /data/elk/elasticsearch

# Copy the certificates

cp cert.zip /etc/elasticsearch && cd /etc/elasticsearch && unzip cert.zip && cp cert/* . && rm -rf cert

# Crack X-Pack again on all nodes

cp x-pack-core-6.8.9.jar /usr/share/elasticsearch/modules/x-pack-core/

curl -XPUT -u elastic 'http://192.168.101.2:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

# Start the service

systemctl daemon-reload

systemctl restart elasticsearch

Common commands

Check whether the cluster is healthy

curl -u 'elastic:<password>' 'http://192.168.167.120:9200/_cat/health?v'

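Problems encountered

A new node cannot join the cluster and fails to start, reporting: client did not trust this server's certificate, closing connection Netty4TcpChannel{localAddress=0.0.0.0/0.0.0.0:9300, remoteAddress=/172.16.3.137:54781}

Cause: the new node's IP was not included in the certificate when the certificate was generated.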
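Whether a node's IP is present in a certificate can be checked before restarting anything. The sketch below inspects the text form printed by `openssl x509`, where IP subject alternative names appear as `IP Address:<ip>`; `san_has_ip` is a hypothetical helper name and `<new-node-ip>` is a placeholder.

```shell
# Hypothetical helper: read `openssl x509 -noout -text` output on stdin and
# succeed if the given IP is listed as a subjectAltName "IP Address" entry.
san_has_ip() {
    ip_re=$(printf '%s' "$1" | sed 's/\./\\./g')    # escape dots for the regex
    grep -Eq "IP Address:${ip_re}(,|[[:space:]]|\$)"
}

# Usage on a node:
#   openssl x509 -in /etc/elasticsearch/DDM-ELK.crt -noout -text | san_has_ip '<new-node-ip>' \
#       || echo "IP missing from certificate, reissue it"
```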

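Solution:

Reissue the certificates

# Generate the SSL certificates on one node

/usr/share/elasticsearch/bin/elasticsearch-certgen

1. Certificate output file name: accept the default

2. Cluster name: DDM-ELK

3. Certificate directory name: defaults to the cluster name

4. Node IPs: 192.168.101.5,192.168.101.3,192.168.101.4,192.168.101.6,192.168.101.2

5. Node names: 192.168.101.5,192.168.101.3,192.168.101.4,192.168.101.6,192.168.101.2

6. Any other instances: none, just press Enter

# Copy and distribute the certificates to all nodes

cp cert.zip /etc/elasticsearch && cd /etc/elasticsearch && unzip cert.zip && cp cert/* . && rm -rf cert

# Crack X-Pack again on all nodes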

cp x-pack-core-6.8.9.jar /usr/share/elasticsearch/modules/x-pack-core/

curl -XPUT -u elastic 'http://192.168.101.2:9200/_xpack/license' -H "Content-Type: application/json" -d @license.json

# Start the service

systemctl daemon-reload

systemctl restart elasticsearch